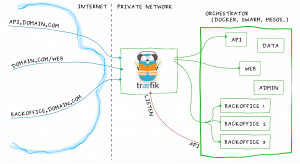

Some weeks ago, I stumble upon Træfik which is obviously not a new Tool album but a HTTP proxy server which has everything a highly dynamic Docker platform needs to expose its services and includes Let’s Encrypt silently – Such a thing doesn’t exist you say?

A brief summary:

Træfik is a single binary daemon, written in Go, lightweight and can be used in virtually any modern environment. Configuration is done by choosing a backend you have. This could be an orchestrator like Swarm or Kubernetes but you can also use a more “open” approach, like etcd, REST API’s or file backend (backends can be mixed of course). For example, if you are using plain Docker, or Docker Compose, Træfik uses Docker object labels to configures services. A simple configuration looks like this:

[docker] endpoint = "unix:///var/run/docker.sock" # endpoint = "tcp://127.0.0.1:2375" domain = "docker.localhost" watch = true

Træfik constantly watch for changes in your running Docker container and automatically adds backends to its configuration. Docker container itself only needs labels like this (configured as Docker Compose in this example):

whoami: image: emilevauge/whoami # A container that exposes an API to show its IP address labels: - "traefik.frontend.rule=Host:whoami.docker.localhost"

The clue is, that you can configure everything you’ll need that is often pretty complex in conventional products. This is for Example multiple domains, headers for API’s, redirects, permissions, container which exposes multiple ports and interfaces and so on.

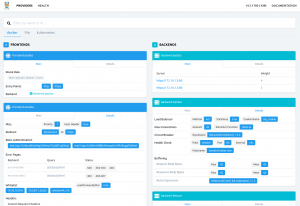

How you succeed with the configuration can be validated in a frontend which is included in Træfik.

However, the best thing everybody was waiting is the seamless Let’s Encrypt integration which can be achieved with this snippet:

[acme] email = "test@traefik.io" storage = "acme.json" entryPoint = "https" [acme.httpChallenge] entryPoint = "http" # [[acme.domains]] # main = "local1.com" # sans = ["test1.local1.com", "test2.local1.com"] # [[acme.domains]] # main = "local2.com" # [[acme.domains]] # main = "*.local3.com" # sans = ["local3.com", "test1.test1.local3.com"]

Træfik will create the certificates automatically. Of course, you have a lot of conveniences here too, like wildcard certificates with DNS verification though different DNS API providers and stuff like that.

To conclude that above statements, it exists a thing which can meet the demands for a simple, apified reverse proxy we need in a dockerized world. Just give it a try and see how easy microservices – especially TLS-encrypted – can be.