We use Request Tracker on a daily basis, and have written many extensions for our own workflows and visualizations. Lately we’ve been helping a customer to migrate from OTRS to RT running in NWS, and learned about new ways to improve our workflows.

Highlight Search Results on Conditions

When you own a ticket, but someone else updated the ticket with a comment/reply, you want to immediately see this. Our extension makes this possible with either a background color or an additional icon (or both).

You can also limit this to replies/comments from customers, where the last update wasn’t performed by users in a specific group. This allows to immediately see support or sales tickets which need to be worked on in the dashboards.

Another use case is to highlight search result rows when a custom field matches a specified value. If you’re setting tickets for example, you can visually see the difference between a „bought ticket“ and „paid ticket“ state.

While developing the extension, I’ve also fixed an upstream RT bug which has been merged for future releases. There’s even more possibilities, as we’ve recognised that one of the BestPractical/RT developers forked our extension already 🙂

Quick Assign People to Tickets

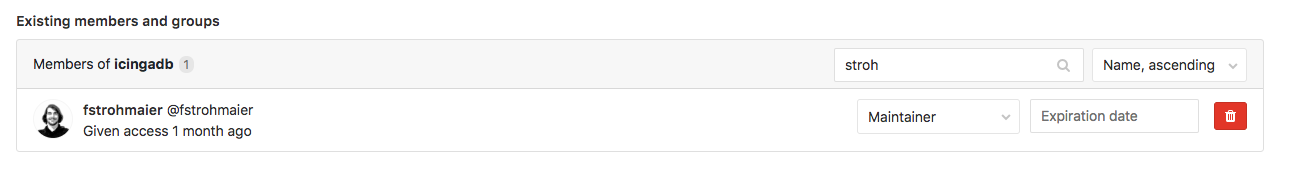

By default, one needs to edit the „People“ tab to assign a ticket to a privileged user, or modify adminCC and the requestor. This takes far too long and as such, our own NETWAYS extension improved this with drop-downs and action buttons. We have now open-sourced this feature set into a new extension on GitHub: rt-extension-quickassign.

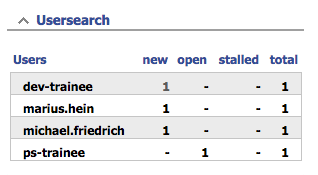

Show Ticket Count per User and Status

Do you need more customizations for Request Tracker, or want to run RT in a managed cloud environment? Just get in touch 🙂