Joking aside, the Go developers do not yet offer a way to call C++ libraries without further ado. But it does work with further ado: C++ functions can be wrapped in a C library, and in my last blog post on this topic I already explained how to call C libraries from Go. In other words, all it takes is a wafer-thin C layer between C++ and Go.

Multilingual++ in practice

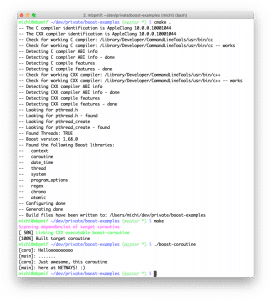

As last time, I have prepared something in advance: an interface to the Boost.Regex library. It can be found in this GitHub repo and consists of the following components:

- A struct that wraps boost::basic_regex<char>
- A C library that wraps the C++ part
- The Go library that uses the C library

Wrapper struct

This struct is necessary because the size of boost::basic_regex<char> is known to C++ but not to Go. The wrapper struct, by contrast, has a fixed size (one pointer).

libcxx/regex.hpp

[c language=”++”]

#pragma once

#include <boost/regex.hpp>
// boost::basic_regex
// boost::match_results
// boost::regex_search

#include <new>
// placement new

#include <utility>
// std::forward
// std::move

// Owns a heap-allocated boost::basic_regex<Char> through a single pointer,
// so the struct itself is exactly one pointer in size.
template<class Char>
struct Regex
{
    // Forwards all arguments to the boost::basic_regex<Char> constructor.
    template<class... Args>
    inline
    Regex(Args&&... args) : Rgx(new boost::basic_regex<Char>(std::forward<Args>(args)...))
    {
    }

    Regex(const Regex& origin) : Rgx(new boost::basic_regex<Char>(*origin.Rgx))
    {
    }

    Regex& operator=(const Regex& origin)
    {
        Regex copy (origin);
        return *this = std::move(copy);
    }

    // Takes over the pointer and leaves origin empty.
    inline
    Regex(Regex&& origin) noexcept : Rgx(origin.Rgx)
    {
        origin.Rgx = nullptr;
    }

    inline
    Regex& operator=(Regex&& origin) noexcept
    {
        // Destroy the current state, then move-construct in place.
        this->~Regex();
        new(this) Regex(std::move(origin));
        return *this;
    }

    inline
    ~Regex()
    {
        delete this->Rgx;
    }

    // Reports whether the pattern matches anywhere in [first, last).
    template<class Iterator>
    bool MatchesSomewhere(Iterator first, Iterator last) const
    {
        boost::match_results<Iterator> m;
        return boost::regex_search(first, last, m, *this->Rgx);
    }

    boost::basic_regex<Char>* Rgx;
};

[/c]
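The fixed-size argument can be checked at compile time. The following is only a minimal sketch, not the repository's code: `Handle` is a hypothetical stand-in for `Regex<Char>`, and it wraps `std::regex` instead of `boost::basic_regex` so that it compiles without Boost.

```cpp
#include <cassert>
#include <regex>
#include <string>
#include <utility>

// Hypothetical stand-in for Regex<Char>: owns a heap-allocated std::regex
// (assumption: std::regex replaces boost::basic_regex here) via one raw pointer.
struct Handle {
    std::regex* impl = nullptr;

    Handle() = default;
    explicit Handle(const std::string& pattern) : impl(new std::regex(pattern)) {}

    Handle(const Handle&) = delete;
    Handle& operator=(const Handle&) = delete;

    Handle(Handle&& other) noexcept : impl(other.impl) { other.impl = nullptr; }
    Handle& operator=(Handle&& other) noexcept {
        std::swap(impl, other.impl);  // the old object dies together with `other`
        return *this;
    }

    ~Handle() { delete impl; }

    // An empty (moved-from) handle matches nothing.
    bool matches_somewhere(const std::string& subject) const {
        return impl != nullptr && std::regex_search(subject, *impl);
    }
};

// However large the wrapped class is, the wrapper is exactly one pointer wide.
static_assert(sizeof(Handle) == sizeof(void*), "Handle must be pointer-sized");
static_assert(alignof(Handle) == alignof(void*), "Handle must be pointer-aligned");
```

Because the layout is a single pointer, a foreign language only has to reserve pointer-sized storage for it and never needs to know the layout of the wrapped class.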

A C library that wraps the C++ part

The following functions are genuine C++ functions, but thanks to extern "C" they are visible in the library as C functions and can be called from Go.

libcxx/regex.cpp

[c language=”++”]

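#include "regex.hpp"
// Regex

#include <boost/regex.hpp>
using boost::bad_expression;

#include <stdint.h>
// uint64_t

#include <utility>
using std::move;

// Compiles the pattern in [pattern_start, pattern_end) into the Regex<char> at out.
// out must point to a zero-initialized Regex<char>, because the move assignment
// destroys the previous contents first.
// Returns 0 on success, 2 for an invalid pattern and 1 for any other failure.
extern "C" unsigned char CompileRegex(uint64_t pattern_start, uint64_t pattern_end, uint64_t out)
{
    try {
        *(Regex<char>*)out = Regex<char>((const char*)pattern_start, (const char*)pattern_end);
    } catch (const bad_expression&) {
        return 2;
    } catch (...) {
        return 1;
    }
    return 0;
}

// Releases the regex by moving it into a local that is destroyed at scope exit.
// The Regex<char> at rgx is left empty, so a second call is harmless.
extern "C" void FreeRegex(uint64_t rgx)
{
    try {
        Regex<char> r (move(*(Regex<char>*)rgx));
    } catch (...) {
    }
}

// Returns 1 if the regex matches somewhere in [subject_start, subject_end),
// 0 if it does not and -1 if the search threw an exception.
extern "C" signed char MatchesSomewhere(uint64_t rgx, uint64_t subject_start, uint64_t subject_end)
{
    try {
        return ((const Regex<char>*)rgx)->MatchesSomewhere((const char*)subject_start, (const char*)subject_end);
    } catch (...) {
        return -1;
    }
}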

[/c]

libcxx/regex.h

[c language=”++”]

#pragma once

#include <stdint.h>
// uint64_t

unsigned char CompileRegex(uint64_t pattern_start, uint64_t pattern_end, uint64_t out);

void FreeRegex(uint64_t rgx);

signed char MatchesSomewhere(uint64_t rgx, uint64_t subject_start, uint64_t subject_end);

[/c]
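The pattern behind these functions is that no C++ exception may cross the extern "C" boundary, so every exception is translated into a status code. The sketch below illustrates this under assumptions: the `demo_*` functions and the `kOk`/`kFailure`/`kBadPattern` names are hypothetical, and `std::regex` is swapped in for Boost.Regex so that the example is self-contained.

```cpp
#include <cassert>
#include <regex>

// Hypothetical status codes, mirroring the 0/1/2 convention of CompileRegex.
enum : unsigned char { kOk = 0, kFailure = 1, kBadPattern = 2 };

// Compiles the pattern in [first, last) and stores the result in *out.
// On failure *out is left untouched and a status code is returned instead.
extern "C" unsigned char demo_compile(const char* first, const char* last, void** out) {
    try {
        *out = new std::regex(first, last);
        return kOk;
    } catch (const std::regex_error&) {
        return kBadPattern;  // the pattern itself is invalid
    } catch (...) {
        return kFailure;     // anything else, e.g. std::bad_alloc
    }
}

// Returns 1 for a match, 0 for no match and -1 if the search threw.
extern "C" signed char demo_matches(const void* rgx, const char* first, const char* last) {
    try {
        return std::regex_search(first, last, *static_cast<const std::regex*>(rgx)) ? 1 : 0;
    } catch (...) {
        return -1;
    }
}

// Releases a regex created by demo_compile; passing a null pointer is allowed.
extern "C" void demo_free(void* rgx) {
    delete static_cast<std::regex*>(rgx);
}
```

The caller on the other side of the boundary then only has to switch on small integers, which is what the Go code does with the return values of the real functions.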

Go library

This is what ultimately calls the C functions. It passes the pointers as integers in order to circumvent certain safety measures of CGo. The Regex struct corresponds to the Regex struct from the C++ part.

regex.go

[c language=”go”]

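package boostregex2go

import (
	"io"
	"reflect"
	"runtime"
	"unsafe"
)

/*
#include "libcxx/regex.h"
// CompileRegex
// FreeRegex
// MatchesSomewhere
*/
import "C"

// OOM is returned when the C++ side fails for any reason other than a bad pattern.
type OOM struct {
}

var _ error = OOM{}

func (OOM) Error() string {
	return "out of memory"
}

// BadPattern is returned when Boost.Regex rejects the pattern.
type BadPattern struct {
}

var _ error = BadPattern{}

func (BadPattern) Error() string {
	return "bad pattern"
}

// Regex mirrors the C++ Regex<char>: a single pointer to the compiled regex.
type Regex struct {
	rgx unsafe.Pointer
}

var _ io.Closer = (*Regex)(nil)

// Close frees the C++ regex. It always returns nil.
func (r *Regex) Close() error {
	C.FreeRegex(rgxPtr64(r))
	return nil
}

// MatchesSomewhere reports whether the regex matches anywhere in subject.
func (r *Regex) MatchesSomewhere(subject []byte) (bool, error) {
	// The C side only sees integers, so keep subject alive until the call returns.
	defer runtime.KeepAlive(subject)

	start, end := bytesToCharRange(subject)

	switch C.MatchesSomewhere(rgxPtr64(r), start, end) {
	case 0:
		return false, nil
	case 1:
		return true, nil
	default:
		return false, OOM{}
	}
}

// NewRegex compiles pattern. The returned Regex must be released with Close.
func NewRegex(pattern []byte) (*Regex, error) {
	// Zero-initialized, as CompileRegex expects.
	rgx := &Regex{}

	defer runtime.KeepAlive(pattern)

	start, end := bytesToCharRange(pattern)

	switch C.CompileRegex(start, end, rgxPtr64(rgx)) {
	case 0:
		return rgx, nil
	case 2:
		return nil, BadPattern{}
	default:
		return nil, OOM{}
	}
}

// bytesToCharRange returns the addresses of the first byte of b and of the
// byte one past its end, both as integers.
func bytesToCharRange(b []byte) (C.uint64_t, C.uint64_t) {
	sh := (*reflect.SliceHeader)(unsafe.Pointer(&b))
	return C.uint64_t(sh.Data), C.uint64_t(sh.Data + uintptr(sh.Len))
}

// rgxPtr64 returns the address of p as an integer.
func rgxPtr64(p *Regex) C.uint64_t {
	return C.uint64_t(uintptr(unsafe.Pointer(p)))
}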

[/c]
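Seen from the receiving side, the pointers-as-integers trick amounts to the following. This is a hedged sketch with hypothetical helper names (`to_u64`, `to_chars`, `demo_count_byte`), none of which are part of the repository: a pointer is widened to a 64-bit integer on one side and turned back into a pointer on the other, and a byte slice travels as a half-open range of two such integers.

```cpp
#include <cassert>
#include <cstdint>

static_assert(sizeof(void*) <= sizeof(std::uint64_t), "pointers must fit into 64 bits");

// Hypothetical helper: encodes a pointer as a 64-bit integer.
inline std::uint64_t to_u64(const void* p) {
    return static_cast<std::uint64_t>(reinterpret_cast<std::uintptr_t>(p));
}

// Hypothetical helper: decodes such an integer back into a char pointer.
inline const char* to_chars(std::uint64_t v) {
    return reinterpret_cast<const char*>(static_cast<std::uintptr_t>(v));
}

// Receives the half-open byte range [start, end) as two integers, like the
// C functions above, and counts how often `needle` occurs in it.
extern "C" std::uint64_t demo_count_byte(std::uint64_t start, std::uint64_t end, char needle) {
    const char* first = to_chars(start);
    const char* last = to_chars(end);
    assert(first <= last);

    std::uint64_t count = 0;
    for (const char* p = first; p != last; ++p) {
        if (*p == needle) {
            ++count;
        }
    }
    return count;
}
```

Since the callee sees only integers, nothing ties the memory's lifetime to the call. That is why the Go code has to keep its slices alive itself with runtime.KeepAlive.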

Conclusion++

When something crazy doesn't seem to work, I don't give up; I just make it even crazier. Impossible is nothing.

If you also want to learn how to make the impossible possible, come over to our side of the Force.