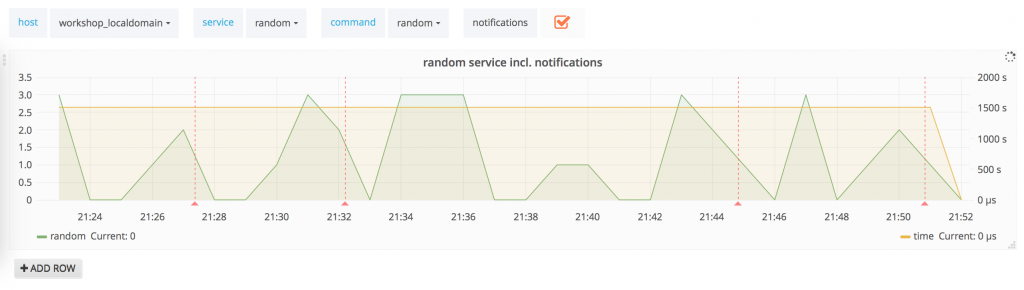

Last year, Blerim told us how to benchmark a Graphite and now we want to share some experience on how to handle the emerging IOPS with its pro and contra. It is important to know that Graphite can store its metrics in 3 different ways (CACHE_WRITE_STRATEGY). Each one of them influences another system resource like CPU, RAM or IO. Let us start with an overview about each option and its function.

Last year, Blerim told us how to benchmark a Graphite and now we want to share some experience on how to handle the emerging IOPS with its pro and contra. It is important to know that Graphite can store its metrics in 3 different ways (CACHE_WRITE_STRATEGY). Each one of them influences another system resource like CPU, RAM or IO. Let us start with an overview about each option and its function.

max

The cache will keep almost all the metrics in memory and only writes the one with the most datapoints. Sounds nice and helps a lot to reduce the general and random io. But this option should never be used without the WHISPER_AUTOFLUSH flag, because most of your metrics are only available in memory. Otherwise you have a high risk of losing your metrics in cases of unclean shutdown or system breaks.

The disadvantage here is that you need a strong CPU, because the cache must sort all the metrics. The required CPU usage increases with the amount of cached metrics and it is important to keep an eye on enough free capacity. Otherwise it will slow down the processing time for new metrics and dramatically reduce the rendering performance of the graphite-web app.

naive

This is the counterpart to the max option and writes the metrics randomly to the disk. It can be used if you need to save CPU power or have fast storage like solid state disk, but be aware that it generates a large amount of IOPS !

sorted

With this option the cache will sort all metrics according to the number of datapoints and write the list to the disk. It works similar to max, but writes all metrics, so the cache will not get to big. This helps to keep the CPU usage low while getting the benefit of caching the metrics in RAM.

All the mentioned options can be controlled with the MAX_UPDATES_PER_SECOND, but each one will be affected in its own way.

Summary

At the end, we made use of the sorted option and spread the workload to multiple cache instances. With this we reduced the amount of different metrics each cache has to process (consistent-hashing relay) and find the best solution in the mix of MAX_UPDATES_PER_SECOND per instance to the related IOPS and CPU/RAM usage. It may sound really low, but currently we are running 15 updates per second for each instance and could increase the in-memory-cache with a low CPU impact. So we have enough resources for fast rendering within graphite-web.

I hope this post can help you in understanding how the cache works and generates the IOPS and system requirements.

Hello! Your long wait is over and finally

Hello! Your long wait is over and finally