About the OpenInfra Summit 2022

This year’s OpenInfra Summit takes place in Berlin. After two long years, it is once again possible to attend in person and meet developers, users and many others involved in the community.

The summit features talks about a wide variety of topics related to open source infrastructure technologies, ranging from OpenStack and container orchestration like Kubernetes to many other interesting topics like Machine Learning, CI/CD, Hardware Enablement, Edge Computing and NFV.

Who is going?

Since OpenStack and Kubernetes are already part of the NWS product portfolio, our Web Services team members are always interested in news around those topics!

Therefore some of our team members will be present at the summit. This year will also be the first time that NETWAYS Web Services joins the OpenInfra Summit as a Supporting Sponsor!

Will you be at #OpenInfra Summit Berlin next week, too?

NWS is attending as a sponsor and excited to meet lots of #infrastructure enthusiasts interested in #OpenStack, #Kubernetes and other #OpenSource #technologies.

Hope to see you around at @openinfradev! pic.twitter.com/IroBxTLdjh

NETWAYS Web Services (@NetwaysCloud), June 2, 2022

From the NETWAYS Web Services team, Sebastian Saemann, Achim Ledermüller and I, Gabriel Hartmann, will be on site. Bernd Erk, the CEO of NETWAYS, will attend as well.

What to expect?

There will be people from all over the world joining the summit. It will be an opportunity to meet like-minded, interesting people and to exchange opinions, discuss experiences or just to enjoy a chat with them.

The schedule already reveals that many top engineers, CTOs and developers from all sorts of companies will share their experiences and best practices, and show off the latest improvements made to the projects. A lot of interesting topics will be covered, so there is surely something for everyone!

I’m already excited to go there and can’t wait to learn many new things regarding OpenStack, Kubernetes, CI/CD and much more.

My colleagues and I are also thrilled that we can join the summit in person! Meeting other people face to face is simply a different experience, and watching keynotes and demos live on site is always exciting. Will you be there, too?

Follow us on Twitter to get some first-hand impressions of our experience at the OpenInfra Summit 2022!

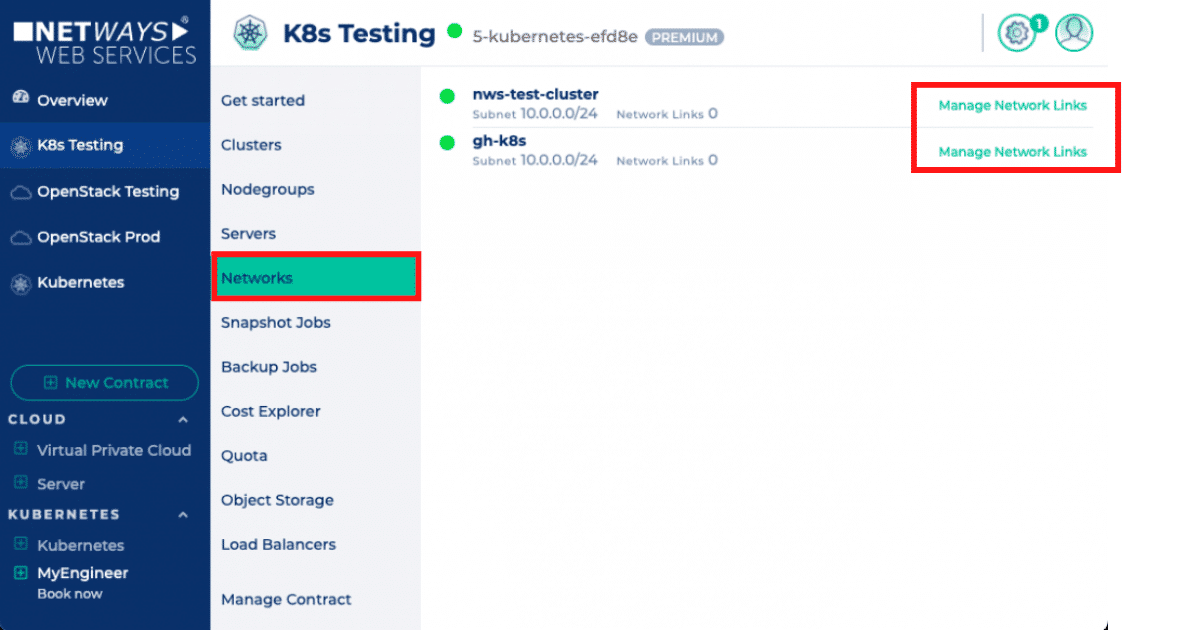

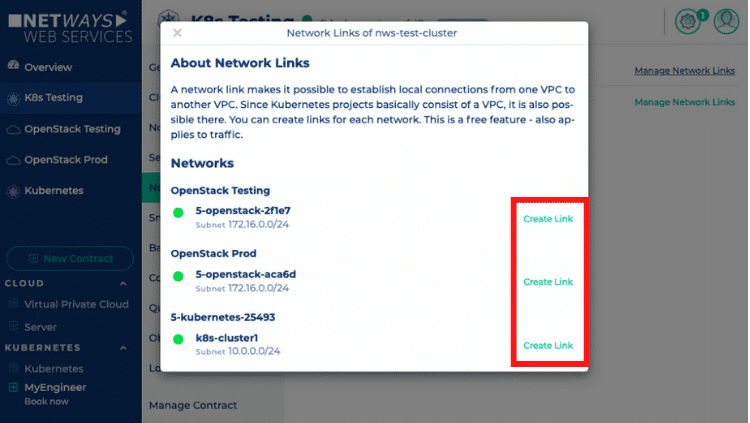

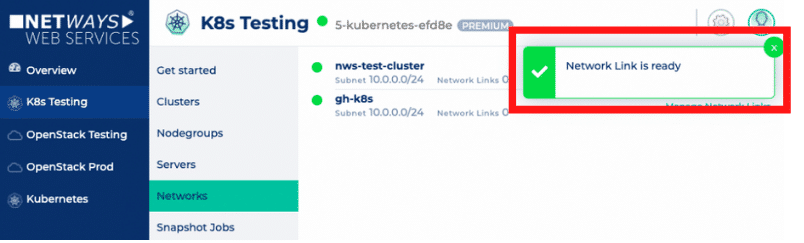

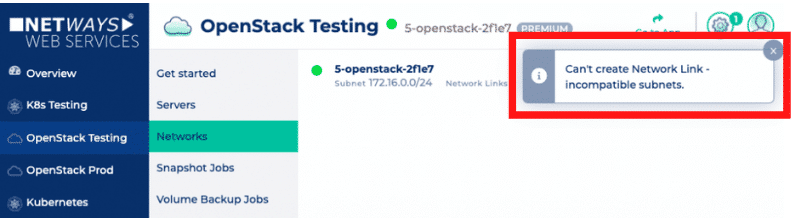

The networks are grouped by the projects they belong to. For each network you now have an option to create a link, as seen in the screenshot. Once you have picked the network you would like to link to the current one, just click the “Create Link” button next to it. Only if both of those requirements are met will it be possible to route between the networks, and the job will start. Once it’s finished, you’ll be notified.

Team-oriented instant messaging has become quite popular in recent years with Slack. Its success is undeniable: more than 6 million people use Slack every day.
