Wow, it was an outstanding stackconf 2022! Many thanks to all the organizers, speakers and participants who made it possible throughout three days full of Open Source Infrastructure Love. I am truly blessed to have been a part of this amazing event!

For all of you who would like to catch up on the expert talks of this special event, all of them are available on YouTube. My blog post today is all about Ricardo Castro and his talk “Network Service Mesh”. Due to personal circumstances, he was unfortunately unable to be on site and share his expertise with us in person. Nevertheless, he was able to record his talk in advance and send it to us. So, I am going to recap his talk for you in this blog and give some insights into my findings.

Why Network Service Mesh?

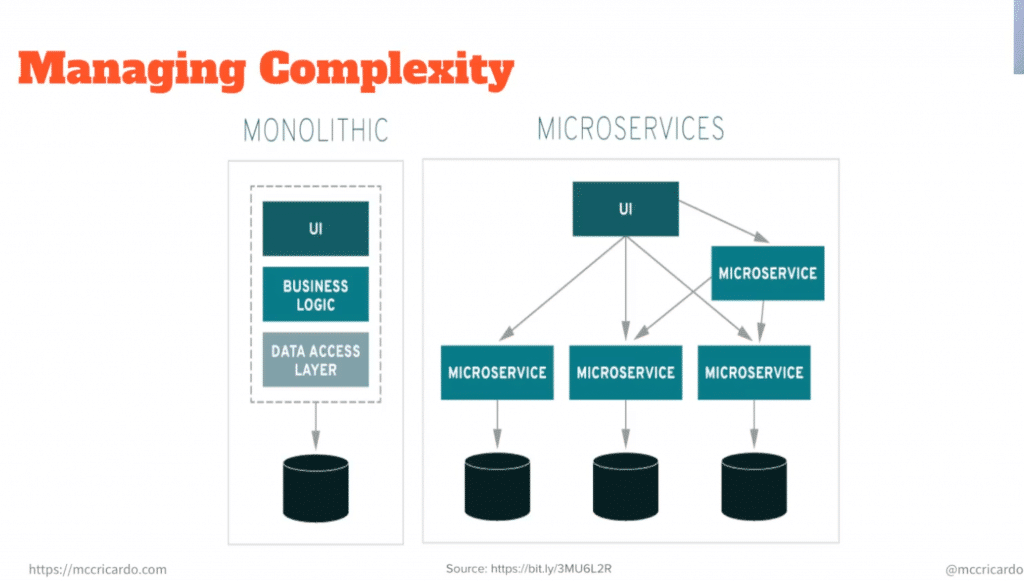

Microservice architectures build applications as a collection of loosely coupled services. In this type of architecture, the goal is for the services to be fine-grained. The main objective for teams is that each service is independent of the other services. Building loosely coupled services reduces many kinds of dependencies and the complexity that comes with them. However, reliably managing network connectivity in distributed systems brings its own set of challenges. Service discovery, load balancing, fault tolerance, metrics collection, and security are some examples of issues that arise with distributed systems.

In short, how do you facilitate workload collaboration to create an application on the fly that communicates regardless of where those workloads are running? Ricardo is going to answer this and plenty of other questions for us below, so prick up your ears!

Traditional Service Meshes

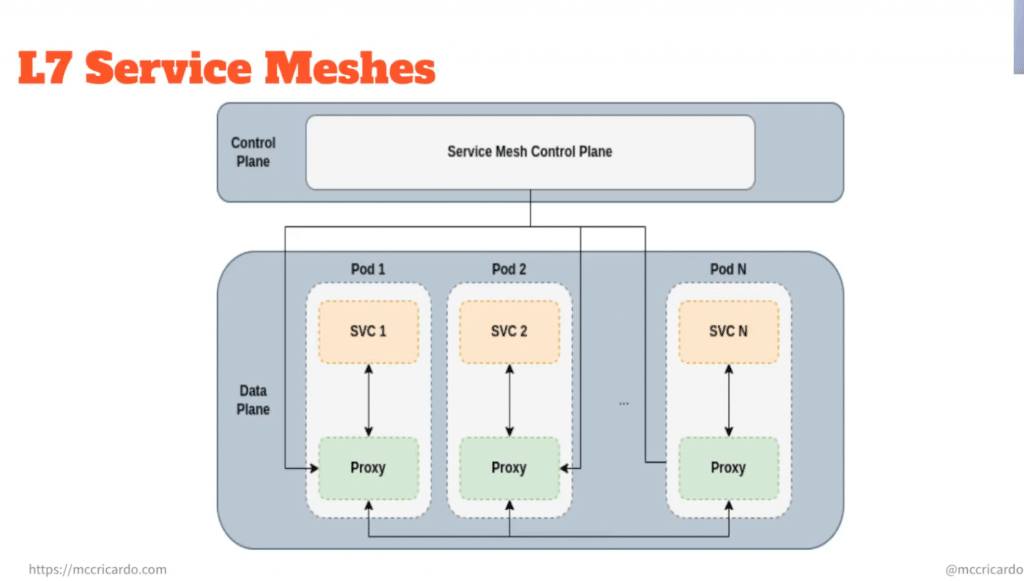

A service mesh is the connective tissue between all of your services. It adds capabilities such as traffic control, service discovery, load balancing, resilience, observability, and security. A service mesh allows applications to offload these capabilities from application-level libraries. A service mesh typically consists of two components, the data plane and the control plane. The data plane is made up of the business services alongside the proxies, which are responsible for all the traffic. The control plane comprises a set of services that provide the administrative functions needed to control the service mesh. There are many service mesh implementations to choose from.

Service mesh connectivity works well within a runtime domain, but workloads often need to interoperate with services outside the runtime domain to provide full functionality. But what are runtime and connectivity domains?

A runtime domain is essentially a compute domain that runs workloads. Traditionally, there is exactly one connectivity domain per runtime domain because connectivity domains are coupled to runtime domains. This means that only workloads within the runtime domain can be part of the connectivity domain. To truly take advantage of the microservice approach, microservice components must be minimally interdependent. Application service meshes work well within a connectivity domain, and they target Layer 7 protocols such as HTTPS. They can help cloud-native workloads achieve loose coupling through functionality such as service discovery and fault tolerance. Ricardo explored some of the use cases where traditional service meshes fail and where Network Service Mesh can mitigate these challenges.

Where Do L7 Service Meshes Fall Short?

The first example is the Multi/Hybrid Cloud scenario. In this scenario, you have multiple distinct and independent clusters that can be public cloud, private cloud or on-premises. The workloads in each of these clusters must be able to communicate with each other. In short, you have a number of workloads running in different connectivity domains that need to be connected.

Another example is the Multi-Corp/Extra-Net scenario. In this scenario, workloads from different clusters also need to interconnect independent of the connectivity domains they are running in. The main difference between this and the hybrid cloud scenario is that you now have different domains of administrative control. This means that different organizations are managing their own runtime and connectivity domains. The workloads in each of these domains have to work with each other in some fashion.

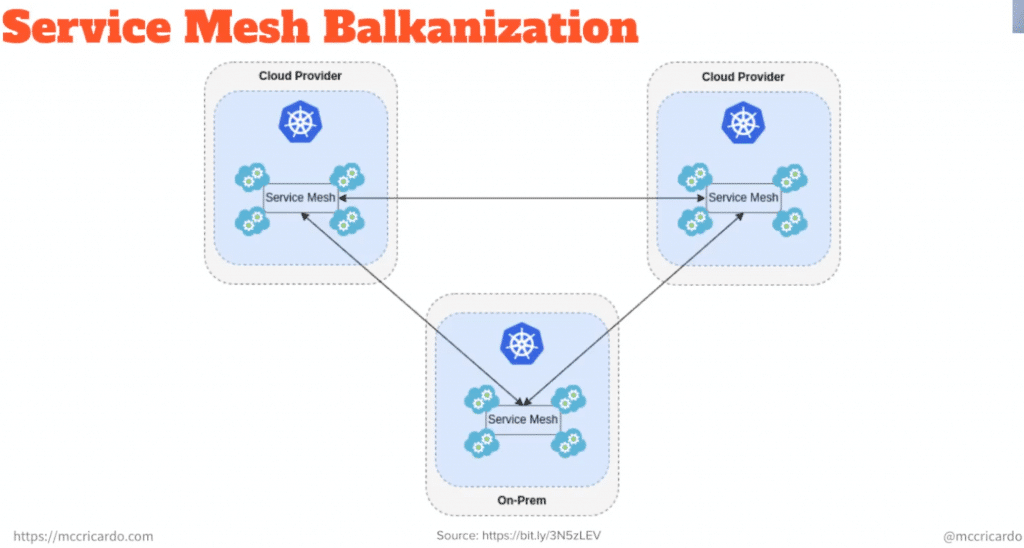

Service mesh balkanization is another example of how traditional Layer 7 service meshes fall short. Traditional service meshes work well within a single Kubernetes cluster. When local service meshes need to collaborate, they are typically connected via gateways. These are usually static Layer 7 routes that are very difficult to scale and maintain. In addition, traditional service meshes cannot guarantee reliable networking in such a scenario because they assume there is a reliable Layer 3 underneath them. That is usually the case within a single cluster, but it cannot be guaranteed beyond it. Ricardo goes on to examine how Network Service Mesh tackles these challenges.

Network Service Mesh (NSM)

Before we dig in further, here is Ricardo’s excellent description of what Network Service Mesh actually is.

Network Service Mesh is a hybrid/multi-cloud IP service mesh that enables Layer 3 (L3), zero-trust, pure network service connectivity, security and observability. It works with your existing K8s Container Network Interface (CNI), provides per-workload granularity, and requires no changes to K8s or to your workloads. Network Service Mesh tackles all of the above-mentioned challenges by enabling individual workloads to connect securely, regardless of where they are running. Your runtime domain provides connectivity between clusters and requires no intervention. Before we dive into the key concepts of Network Service Mesh, here are some use cases where NSM can be very useful. Basically, these are the same examples where traditional service meshes fall short:

- A common Layer 3 domain that allows Databases (DB) running in multiple clusters, clouds, etc. to communicate with each other, such as DB replication.

- A single Layer 7 service mesh such as Kuma, Linkerd, etc., that connects workloads running in multiple clusters, clouds, etc.

- A single workload connecting to multiple Layer 7 service meshes.

- Workloads from multiple organizations connecting to a single collaborative service mesh that is accessible across organizations.

NSM Key Concepts

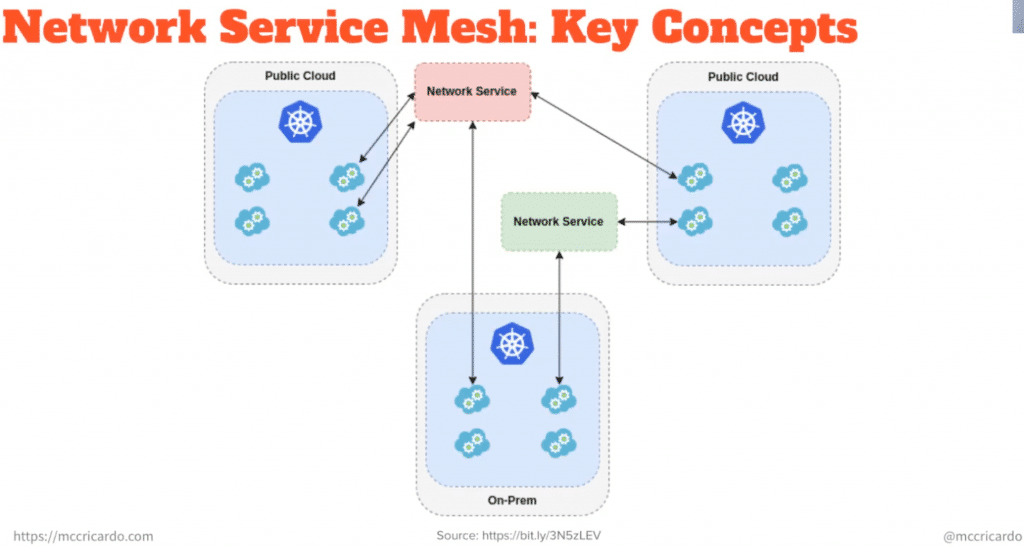

In Network Service Mesh, a network service is a collection of features that are applied to network traffic. These features provide connectivity, security and observability. A network service client is a workload that requests a connection to a network service by specifying the name of that network service. Clients are independently authenticated by their SPIFFE ID and must be authorized to connect to the requested network service. In addition to the network service name, a client may declare a set of key-value pairs called labels. These labels can be used by the network service to select the proper endpoint, or by the endpoint itself to influence the way it provides the service to the client.

A network service endpoint is the entry point to the network service for the client. The network service is identified by its name and carries a payload. Network services are registered in the network service registry. In short, an endpoint can be a Pod running on the same or a different K8s cluster, an aspect of the physical network or anything else to which packets can be delivered for processing. A client and an endpoint are connected by a virtual wire (vWire), which acts as a virtual connectivity link between the two. A client may also request the same network service multiple times, so multiple vWires can lead to the requested endpoint.
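The relationship between clients, endpoints and vWires can be sketched as a small data model. This is only an illustrative toy in Python, not NSM's actual data structures: the class names, the `connect` helper and the example values (`secure-vpn`, `vpn-gw`, the SPIFFE ID) are all assumptions made for this sketch.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Client:
    spiffe_id: str                               # identity used to authenticate the client
    labels: dict = field(default_factory=dict)   # optional key-value pairs sent with a request


@dataclass
class Endpoint:
    name: str
    network_services: set                        # names of the network services it provides


@dataclass
class VWire:
    wire_id: int
    client: Client
    endpoint: Endpoint
    network_service: str


_wire_ids = count(1)  # hypothetical id generator for this sketch


def connect(client: Client, endpoint: Endpoint, network_service: str) -> VWire:
    """Create a vWire if the endpoint provides the requested network service."""
    if network_service not in endpoint.network_services:
        raise ValueError(f"{endpoint.name} does not provide {network_service}")
    return VWire(next(_wire_ids), client, endpoint, network_service)


client = Client("spiffe://example.org/app", labels={"tier": "web"})
endpoint = Endpoint("vpn-gw", {"secure-vpn"})

# Requesting the same network service twice yields two separate vWires
# that both lead to the same endpoint.
first = connect(client, endpoint, "secure-vpn")
second = connect(client, endpoint, "secure-vpn")
```

Authentication and authorization are deliberately left out here; the sketch only shows that a vWire ties exactly one client to one endpoint for one named network service.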

Network Service Mesh API

The Network Service Mesh API includes the Request, Close and Monitor functionality. A client can send a Request gRPC call to the NSM to establish a vWire between the client and the network service. To close a vWire between a client and the network service, the client can send a Close gRPC call to the NSM. A vWire between a client and a network service always has a limited expiration time, so the client usually sends a new Request message to refresh its vWire. If a vWire exceeds its expiration time without being refreshed, the NSM cleans up that vWire. A client can also send a Monitor gRPC call to the NSM to obtain information about the status of a vWire it has to a network service.
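The lifecycle described above (request, refresh, expire, close, monitor) can be sketched with a toy in-memory model. This is not the real gRPC API: the `ToyNSM` class, its method signatures, the `"up"`/`"down"` states and the idea of passing the current time in as `now` are all assumptions chosen to keep the example small and deterministic.

```python
from itertools import count


class ToyNSM:
    """Toy stand-in for the NSM side of Request, Close and Monitor."""

    def __init__(self, ttl_seconds: float = 60.0):
        self.ttl = ttl_seconds
        self._expiry = {}        # wire_id -> time at which the vWire expires
        self._ids = count(1)

    def request(self, now: float, wire_id=None) -> int:
        """Create a vWire, or refresh it when a known wire_id is passed."""
        self._cleanup(now)
        if wire_id is None or wire_id not in self._expiry:
            # Unknown or already expired wires simply get a fresh id here.
            wire_id = next(self._ids)
        self._expiry[wire_id] = now + self.ttl
        return wire_id

    def close(self, wire_id: int) -> None:
        """Tear down a vWire explicitly."""
        self._expiry.pop(wire_id, None)

    def monitor(self, wire_id: int, now: float) -> str:
        """Report whether the vWire still exists at time `now`."""
        self._cleanup(now)
        return "up" if wire_id in self._expiry else "down"

    def _cleanup(self, now: float) -> None:
        """Remove every vWire whose expiration time has passed."""
        for wire_id in [w for w, exp in self._expiry.items() if exp <= now]:
            del self._expiry[wire_id]


nsm = ToyNSM(ttl_seconds=10)
wire = nsm.request(now=0)            # vWire expires at t=10
nsm.request(now=8, wire_id=wire)     # refresh: vWire now expires at t=18
state_refreshed = nsm.monitor(wire, now=15)
state_expired = nsm.monitor(wire, now=18)
```

In this sketch the refresh at `t=8` is what keeps the vWire alive at `t=15`; without it, the cleanup step would already have removed the wire.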

Network Service Mesh Registry

Like any other mesh, the Network Service Mesh has a registry in which network services and network service endpoints are registered. A network service endpoint provides one or more network services. It registers the list of network services it provides, by name, in the registry, together with the destination labels it advertises for each network service. Optionally, a network service can specify a list of matches, which allow the source labels that a client sends with its request to be matched against the destination labels that an endpoint advertised when it registered. You can find a detailed description in the documentation or on YouTube, where Ricardo explains it very well in person.
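To make the matching idea more concrete, here is a minimal sketch of a registry that selects candidate endpoints by comparing labels. It is a simplification under stated assumptions, not NSM's registry API: `ToyRegistry`, `Match`, the rule that the first match with a fitting source selector wins, and all label values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Match:
    source_selector: dict        # labels the client's request must carry
    destination_selector: dict   # labels a candidate endpoint must advertise


def _contains(labels: dict, selector: dict) -> bool:
    """True if every key-value pair of the selector appears in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


class ToyRegistry:
    def __init__(self):
        self._endpoints = {}   # service name -> [(endpoint name, destination labels)]
        self._matches = {}     # service name -> [Match, ...]

    def register_endpoint(self, endpoint_name: str, services: dict) -> None:
        """`services` maps each network service name to its destination labels."""
        for service, labels in services.items():
            self._endpoints.setdefault(service, []).append((endpoint_name, labels))

    def register_service(self, service: str, matches=()) -> None:
        self._matches[service] = list(matches)

    def candidates(self, service: str, source_labels: dict) -> list:
        endpoints = self._endpoints.get(service, [])
        matches = self._matches.get(service, [])
        if not matches:
            # No matches defined: every endpoint of the service is a candidate.
            return [name for name, _ in endpoints]
        for match in matches:
            # Assumption of this sketch: the first fitting match decides.
            if _contains(source_labels, match.source_selector):
                return [name for name, labels in endpoints
                        if _contains(labels, match.destination_selector)]
        return []


registry = ToyRegistry()
registry.register_endpoint("fw-a", {"secure-vpn": {"app": "firewall"}})
registry.register_endpoint("gw-b", {"secure-vpn": {"app": "gateway"}})
registry.register_service("secure-vpn", [
    Match({"tier": "web"}, {"app": "firewall"}),
    Match({}, {"app": "gateway"}),   # empty source selector acts as a catch-all
])

web_candidates = registry.candidates("secure-vpn", {"tier": "web"})
db_candidates = registry.candidates("secure-vpn", {"tier": "db"})
```

Here a client labelled `tier: web` is steered to the firewall endpoint, while any other client falls through to the gateway endpoint.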

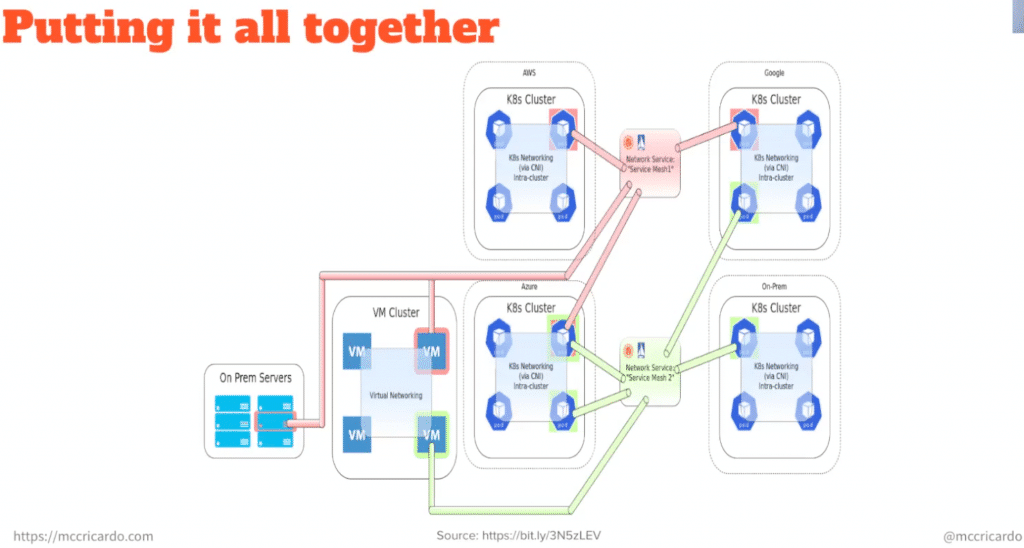

Well, how do you put it all together?

At a high level, a network service endpoint registers one or more network services with the NSM. It registers that list by name, together with the destination labels it advertises for each network service. A client can send a request for a network service to establish a vWire between the client and the network service. It can also close the connection, refresh the vWire before its expiration time runs out, and monitor the state of its vWire. The interesting thing about the NSM is that it allows workloads to request services regardless of where they are running. It is up to the NSM to create the necessary vWires and enable communication between workloads in a secure manner.

Conclusion

In general, Ricardo’s talk covers the issues that Network Service Mesh addresses and its key concepts. However, there is much more to talk about, as he told us. He recommends looking at floating inter-domain, where a client requests a network service from any domain in the network service registry, regardless of where it is running. It can also be valuable to take a look at some of the advanced features, as he encourages us to do. The Network Service Mesh match process, which selects candidate endpoints for specific network services, can be used to implement a variety of advanced features such as Composition, Selective Composition, Topologically Aware Endpoint Selection, and Topologically Aware Scale from Zero. Another important note Ricardo shared with us is that Network Service Mesh has been a CNCF project since 2019 and is currently at the Sandbox maturity level. If you don’t know what CNCF is, it’s definitely worth taking a look at the referenced documentation.

It’s a very interesting and informative topic, and I learned a lot from Ricardo. I had never dealt with it before, but after watching this great talk it is much easier to follow. I hope I was able to reflect what Ricardo talked about in this text. As I mentioned at the beginning, the recorded video of this talk and all the other stackconf talks are available on YouTube! Enjoy!

Take a look at our conference website to learn more about stackconf, check out the archives and register for our newsletter to stay tuned!
