
NETWAYS Blog

Here you'll find everything that moves us: technology, hardware, life at NETWAYS, events, trainings and much more.

OSCamp for Kubernetes 2024 – A Look Back at an Inspiring Event

Yesterday's Open Source Camp for Kubernetes was a huge success! From inspiring keynotes to lively discussions, the event offered a wealth of knowledge and networking opportunities. Highlights from the program: The talks offered not only glimpses...

OSCamp 2024 | Many Thanks to Our Sponsors!

Thank you! Preparations for the OSCamp for Kubernetes 2024 are in full swing, and as we look forward to the upcoming event, we'd like to take the opportunity to thank our Gold sponsors. Their generous support makes this...

Critical: A Bug in Elasticsearch with JDK 22 Can Stop the Service Abruptly

Update: Since yesterday evening, release 8.13.2 with the bug fix has been available. Critical bug: The Elasticsearch service can stop without warning. This is caused by a bug related to JDK 22. Elasticsearch is usually run with its "bundled" JDK....

Monthly Snap March 2024

Spring in Nuremberg at last! Everyone's mood is better in the morning when it's already light as you leave the house. We published lots of great blog posts for you in March. In case you missed any, here's an overview. But of course...

Solutions & Technology

Critical Bug in Puppet Versions 7.29.0 and 8.5.0

A warning to all users of Puppet, and also of Foreman or the Icinga installer: Puppet versions 7.29.0 and 8.5.0 contain a critical bug that prevents a catalog from being compiled and therefore prevents the configuration from being applied. So please...

Kubernetes 101: The Next Steps

Kibana Security Updates: CVSS Critical

It's Groundhog Day again. Well, not quite. Today it's Kibana, from the Elastic Stack toolbox, that has an important security update. Action is definitely required! IMHO even if you have disabled the "Reporting" feature. The...

Events & Trainings

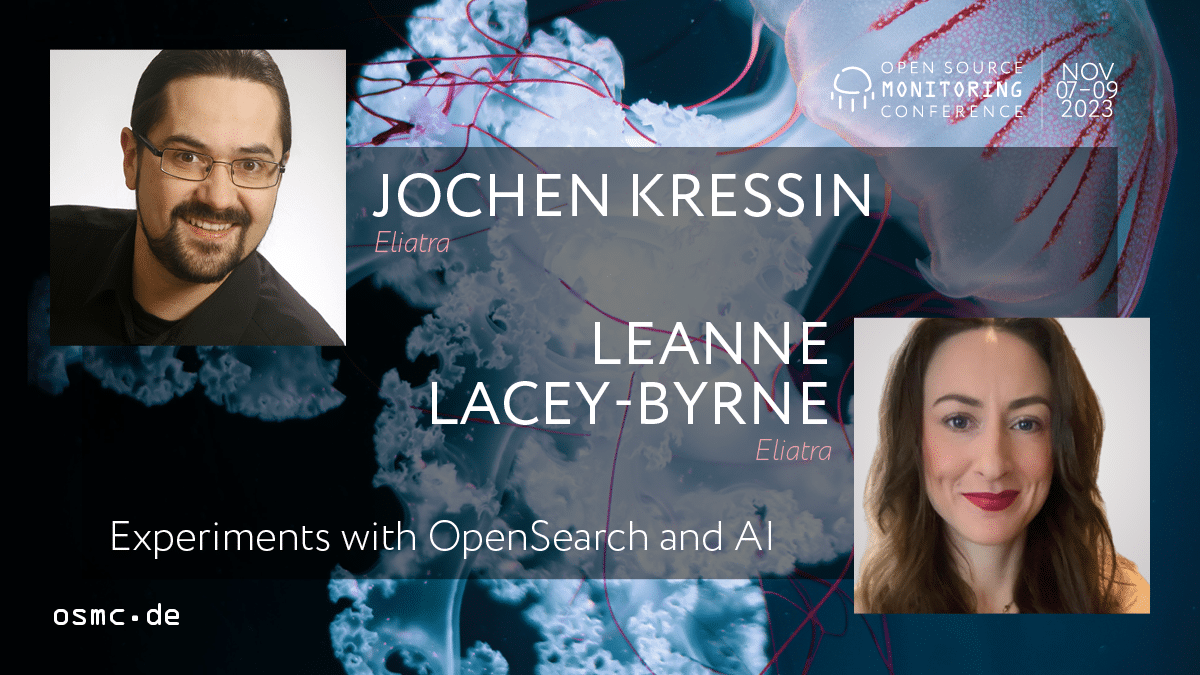

OSMC 2023 | Experiments with OpenSearch and AI

Last year's Open Source Monitoring Conference (OSMC) was a great experience. It was a pleasure to meet attendees from around the world and participate in interesting talks about the current and future state of the monitoring field. Personally, this was my first time...

DevOpsDays Berlin 2024 | The Schedule is Online!

We're excited to share that DevOpsDays Berlin is making a comeback this year! After a one-year break, the event will happen on May 7 & 8 in Berlin. And we've got some great news for you! The Program is All Set! Yes, you heard it right. The two-day program...

OSMC 2023 | Will ChatGPT Take Over My Job?

One of the talks at OSMC 2023 was "Will ChatGPT take over my job?" by Philipp Krenn. It explored the changing role of artificial intelligence in our lives, raising important questions about its use in software development. The Rise of AI in Software...

4 Reasons Why You Shouldn't Miss the OSCamp for Kubernetes

Kubernetes is more than just a technology: it's a revolution in the way we develop, deploy and scale applications. If you're as fascinated by Kubernetes as we are, then OSCamp 2024 is just the thing for you! Here...

Web Services

Effective Access Control for GitLab Pages

GitLab Pages basics: GitLab Pages is a versatile feature that lets you host static websites directly from a GitLab repository. This functionality opens up a wide range of use cases, from creating...

Managed BookStack | Your Efficient Wiki Software

In a world where access to information is crucial, the right wiki software becomes an indispensable tool for companies and organizations. Organizing, sharing and quickly finding information can...

NWS-ID for your Managed Kubernetes!

First Cloud, now Kubernetes: the integration of NWS-ID with our portfolio continues! As a next step, we will merge your Managed Kubernetes clusters with NWS-ID. Credit goes to Justin for extending our cluster stack with OpenID Connect (OIDC), the base for NWS-ID! How...

New at NWS: Managed OpenSearch

We’re happy to announce our brand new product Managed OpenSearch, which we are offering thanks to our new partnership with Eliatra Suite. Eliatra is your go-to company for OpenSearch, the Elastic Stack and Big Data Solutions based on specialised IT security knowledge....

Company

Monthly Snap February 2024

February was an eventful month at NETWAYS! Alongside everyday business, we had our annual meeting and a games night at the office, and many colleagues were out and about at conferences and the job fair in Nuremberg. And of course, lots of blog posts on...

Meet NETWAYS – Irene Hahn

Developing My First Application with Laravel – A Field Report

As a first-year apprentice at NETWAYS, I had the opportunity to gain my first experience in web development as part of a practice project. In this post, I'd like to use my work with the PHP framework Laravel as an example. Why...

Blogroll

Plenty for you to read …

Critical: A Bug in Elasticsearch with JDK 22 Can Stop the Service Abruptly

Update

Since yesterday evening, release 8.13.2 with the bug fix has been available.

Critical Bug

The Elasticsearch service can stop without warning. This is caused by a bug related to JDK 22. Elasticsearch is usually run with its "bundled" JDK, which is JDK 22 in the current versions. This was announced in an email from the Elasticsearch support team. The team apologizes for the bug and is working hard on a fix. According to the email, the problem occurs only sporadically and not on a large scale.

Affected versions:

- Elasticsearch 7.17.19 and 8.13.0/8.13.1, when running with JDK 22
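To check a single node against the list above, a minimal Python sketch can compare the Elasticsearch version with the JVM version the node reports. It assumes you have already read both values, for example version.number from GET / and jvm.version from GET _nodes/jvm. The function names are hypothetical, and the version set contains only the combinations named in this post.

```python
from typing import Tuple

# Only the versions listed in this post as affected when running on JDK 22.
AFFECTED_ES_VERSIONS = {(7, 17, 19), (8, 13, 0), (8, 13, 1)}


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a dotted version string such as "8.13.1" into (8, 13, 1)."""
    return tuple(int(part) for part in version.split("."))


def is_affected(es_version: str, jvm_version: str) -> bool:
    """Return True if this Elasticsearch/JDK combination matches the post.

    es_version:  e.g. "8.13.1" (assumed to come from version.number of GET /)
    jvm_version: e.g. "22.0.1" or "22" (assumed to come from jvm.version of GET _nodes/jvm)
    """
    jdk_major = int(jvm_version.split(".")[0])
    return jdk_major == 22 and parse_version(es_version) in AFFECTED_ES_VERSIONS


if __name__ == "__main__":
    for es, jvm in [("8.13.1", "22.0.1"), ("8.13.1", "21.0.2")]:
        print(es, jvm, "affected" if is_affected(es, jvm) else "not affected")
```

Checking the JDK major version rather than the full string keeps the sketch working whether the node reports "22" or a patch release such as "22.0.1".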

Recommendation:

Since this can lead to data loss, you should act, provided your deployment scenario allows it.

Workaround:

- Self-managed (on premises): Install JDK version 21.0.2 and configure Elasticsearch to use it instead of the bundled JDK 22, for example by pointing the ES_JAVA_HOME environment variable at the new JDK. Example:

$ sudo apt install openjdk-21-jdk-headless

After the installation, restart the service on every node. Remember to do this as a "rolling restart".

- Elastic Cloud (ES Cloud): There is no workaround. Updates are posted on the service status page under "Elasticsearch Instance Disruption on Elasticsearch 8.13".
- ECE/ECK (Elastic Cloud Enterprise / Elastic Cloud on Kubernetes): There is no workaround.

General Solution

The Elastic team is working flat out on a fix for the issue.

You can follow the latest status here:

Solution

Downgrading to OpenJDK as described under "Workaround" is of course still a valid option. However, upgrading all Elasticsearch nodes to 8.13.2 is the better choice. As always, this requires restarting the service, so don't forget to follow the "rolling restart" procedure.
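Both the JDK workaround and the upgrade to 8.13.2 end in a rolling restart. The following Python sketch only builds a step list for a set of nodes and executes nothing. The API calls follow the sequence from Elastic's rolling-restart documentation as we understand it (limit shard allocation to primaries, flush, restart one node, wait for it to rejoin, re-enable allocation, wait for green). The node names, the Step type and the systemctl command are assumptions for a package-based installation, not part of the original post.

```python
import json
from typing import List, NamedTuple


class Step(NamedTuple):
    kind: str    # "api" = request against the cluster, "host" = command on a node
    target: str  # REST endpoint or node name
    detail: str  # request body or shell command (may be empty)


def rolling_restart_plan(nodes: List[str]) -> List[Step]:
    """Build (but do not run) a one-node-at-a-time restart sequence."""
    disable = json.dumps({"persistent": {"cluster.routing.allocation.enable": "primaries"}})
    # Setting the value to null (None in Python) restores the default ("all").
    enable = json.dumps({"persistent": {"cluster.routing.allocation.enable": None}})
    plan: List[Step] = []
    for node in nodes:
        plan += [
            Step("api", "PUT _cluster/settings", disable),
            Step("api", "POST _flush", ""),
            # Assumes a systemd service named "elasticsearch" (package install).
            Step("host", node, "sudo systemctl restart elasticsearch"),
            Step("api", "GET _cat/nodes", f"wait until {node} has rejoined"),
            Step("api", "PUT _cluster/settings", enable),
            Step("api", "GET _cluster/health?wait_for_status=green&timeout=60s", ""),
        ]
    return plan


if __name__ == "__main__":
    # Hypothetical node names, for illustration only.
    for step in rolling_restart_plan(["es-node-1", "es-node-2", "es-node-3"]):
        print(f"[{step.kind}] {step.target} {step.detail}".rstrip())
```

Handling the nodes strictly one after another, and waiting for green before moving on, is what lets the cluster keep serving requests while each node picks up the new JDK or version.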

Editor's Note

Action is recommended here. The vendor has identified the issue and disclosed it publicly, along with recommendations on how to resolve it. We noticed this and would like to use this post to help you recognize the issue and act on it. If you're unsure how to respond and need support, just get in touch with our sales team. Our Professional Services team will be happy to help.

Monthly Snap March 2024

Spring in Nuremberg at last! Everyone's mood is better in the morning when it's already light as you leave the house. We published lots of great blog posts for you in March. In case you missed any, here's an overview. And of course, you can still read all the posts on our homepage.

End of Life – CentOS Linux 7

Trainings – Automation and Monitoring

Open Source Camp for Kubernetes

What other topics did we cover?

Katja built up anticipation for the OSCamp for Kubernetes in April and announced that the DevOpsDays program is set. Markus O. gave us another GitHub update, and Katja revealed the first speakers of stackconf 2024 as well as the date of this year's OSMC. Jonada summarized a talk from OSMC 2023.

NETWAYS GitHub Update March 2024

Welcome to the NETWAYS GitHub Update, the monthly overview of our latest releases.

If you want to get updates directly on release in the future, simply follow us on GitHub: https://github.com/NETWAYS/

check-system-basics v0.1.4

Changelog

- Better handling of timeouts for filesystem checks

- Better error handling for PSI and Netdev checks

https://github.com/NETWAYS/check_system_basics/releases/tag/v0.1.4
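The changelog does not say how check_system_basics handles filesystem timeouts internally, and the following is not the plugin's implementation. As a hypothetical illustration of the general idea, this Python sketch runs os.statvfs in a background thread so that a hanging mount (for example an unreachable NFS share) yields an UNKNOWN result instead of a blocked check. The function name, the thresholds and the injectable statvfs parameter are our own assumptions; the exit codes follow the usual Nagios/Icinga plugin convention.

```python
import os
import threading
from typing import Any, Callable, Dict, Tuple

# Exit codes used by Nagios/Icinga-compatible check plugins.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def check_filesystem(path: str, timeout: float = 5.0, warn_pct: float = 80.0,
                     crit_pct: float = 90.0,
                     statvfs: Callable[[str], Any] = os.statvfs) -> Tuple[int, str]:
    """Return (exit_code, message) for the usage of the filesystem at path."""
    outcome: Dict[str, Any] = {}

    def worker() -> None:
        try:
            outcome["stat"] = statvfs(path)
        except OSError as exc:
            outcome["error"] = exc

    # Daemon thread: a syscall stuck on a dead mount cannot be cancelled,
    # but a daemon thread does not keep the process alive once we return.
    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    thread.join(timeout)
    if thread.is_alive():
        return UNKNOWN, f"UNKNOWN - {path}: statvfs timed out after {timeout}s"
    if "error" in outcome:
        return UNKNOWN, f"UNKNOWN - {path}: {outcome['error']}"

    st = outcome["stat"]
    used = st.f_blocks - st.f_bfree   # blocks in use
    usable = used + st.f_bavail       # capacity visible to unprivileged users, as df reports it
    used_pct = 100.0 * used / usable if usable else 0.0
    if used_pct >= crit_pct:
        state, label = CRITICAL, "CRITICAL"
    elif used_pct >= warn_pct:
        state, label = WARNING, "WARNING"
    else:
        state, label = OK, "OK"
    return state, f"{label} - {path}: {used_pct:.1f}% used"


if __name__ == "__main__":
    print(check_filesystem("/")[1])
```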

check-hp-firmware v1.4.0-rc

Changelog

- Bugfix: Fixed incorrect timeout calculation

- Removed unused --ilo flag

- Added iLO6 support

- Added new flags for disabling subchecks

https://github.com/NETWAYS/check_hp_firmware/releases/tag/v1.4.0-rc1

OSMC 2024 is Calling for Sponsors

What about positioning your brand in a focused environment of international IT monitoring professionals? Discover why OSMC is just the perfect spot for it.

Meet your Target Audience

Sponsoring the Open Source Monitoring Conference is a fantastic opportunity to promote your brand.

Raise corporate awareness, meet potential business partners, and grow your business with lead generation. Network with the constantly growing Open Source community and establish ties with promising IT professionals for talent recruitment. Connect to a diverse and international audience including renowned IT specialists, Systems Administrators, Systems Engineers, Linux Engineers and SREs.

Your Sponsorship Opportunities

Our sponsorship packages are available in a variety of budgets and engagement preferences: Platinum, Gold, Silver, and Bronze.

Options range from your own booth, speaking slots and lead scanning to social media and various forms of logo promotion.

We also offer add-ons that can be booked separately. Use this unique chance to get even more out of your sponsorship: sponsor the Dinner & Drinks event, the Networking Lounge or the Welcome Reception.

Download the sponsor prospectus for full details and pricing.

We look forward to hearing from you!

Early Bird Alert

Our Early Bird ticket sale is already running. Make sure to save your seat at the best price until May 31.

Our discounted tickets are selling fast, grab yours now before they’re gone!

Save the Date

OSMC 2024 is taking place from November 19 – 21, 2024 in Nuremberg. Mark your calendars and be part of the 18th edition of the event!

DevOpsDays Berlin 2024 | The Schedule is Online!

We’re excited to share that DevOpsDays Berlin is making a comeback this year! After a one-year break, the event will happen on May 7 & 8 in Berlin. And we’ve got some great news for you!

The Program is All Set!

Yes, you heard it right. The two-day program for this DevOpsDays 2024 is already sorted out. We’ve got top-notch speakers ready to share their expertise and inspire you. The program includes a mix of talks we’ve carefully selected and discussions you can organize yourself, called "open spaces". These open spaces let you chat about anything you want, from technical stuff to culture and even board games for networking.

So, take a quick look to see what’s in store for you.

Anja Kunkel, Mister Spex

Power structures. The fair advantage

In Anja’s talk, she delves into the complexities of power dynamics within organizations, highlighting how formal structures often fail to capture the nuances of human interactions. She discusses the challenges individuals face when trying to influence decisions and offers strategies for navigating these dynamics fairly, emphasizing the importance of understanding informal networks and relationships within the company.

Andreia Otto & Ravikanth Mogulla, Adidas

Navigating the Transition: SRE Challenges and Highlights in Shifting from Monolith to Microservices at adidas e-commerce

Andreia’s and Ravi’s talk is all about sharing insights they gained from transitioning adidas e-commerce from monolithic architectures to microservices. They highlight challenges such as scaling infrastructure and deployment complexities, while focusing on resilience and observability along the consumer journey. Explore the intricacies of monitoring and incident response, including practical lessons and best practices for maintaining reliability in the microservices paradigm. Gain actionable knowledge to navigate this transformative era effectively.

Timothy Mamo

DevOps Lessons from a Primary School Teacher

In this lecture, Timothy discusses how strategies commonly used by primary school teachers can be applied to the tech industry and DevOps culture. Using the CALMS framework, he highlights the importance of culture and sharing, often overlooked by engineers. By adopting these strategies, we can enhance our day-to-day activities and achieve full CALMS implementation.

Ready to Join the Excitement?

Convinced by the program, speakers, and topics? Fantastic! Now, take the next step to secure your spot at the event. Register now, grab your ticket, and be a part of this thrilling event!

What DevOpsDays are all about

DevOpsDays are a series of technical conferences on topics around the practices, tools and cultural philosophy for integrating processes between software development and IT operations teams. The two-day conference is geared towards developers, sysadmins, and anyone involved in techniques related to DevOps – whether you’re a beginner or an expert.