Cheers from Berlin Moabit! It’s the 11th round of OSDC, keeping you in the loop on everything in and around DevOps, Kubernetes, log event management, config management, … and obviously magnificent food and enjoyable get-togethers.

Goooood mooooorning, Berlin!

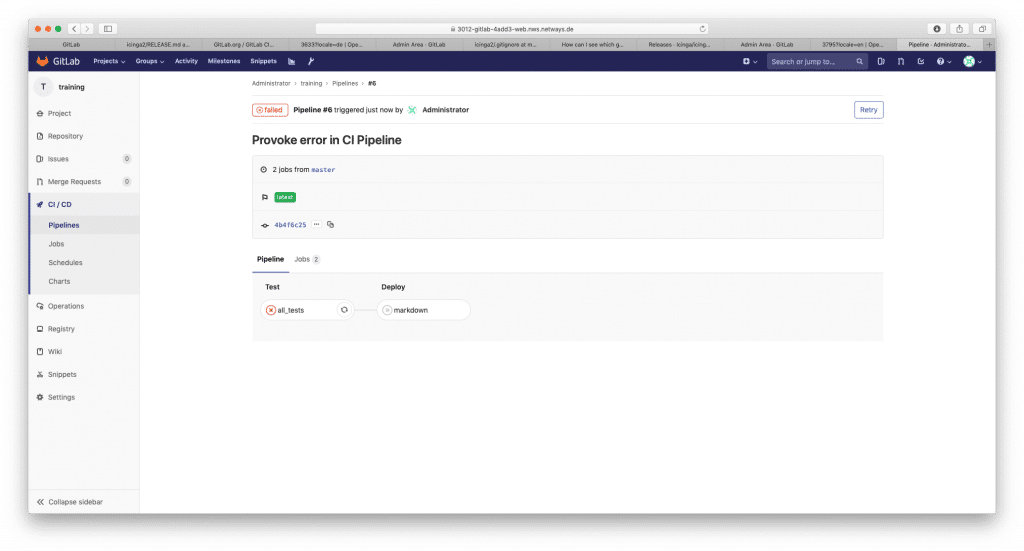

DevOps neither is the question, nor the answer … Arnold Bechtoldt from inovex kicked off OSDC with a provocative talk title. After diving into several problems and scenarios from common environments, we learned to fail often and fail hard, and to improve from there. DevOps really is a culture, and not a job title. Also funny – CV-driven development, or when you propose a tool to prepare for your next job 🙂 One key thing I’ve learned – everyone gets the SAME permissions, which is kind of hard to square with the traditional admin philosophy. Well, and obviously we are YAML programmers now … wait … oh, that’s truly inspired by Mr. Buytaert, isn’t it? 😉

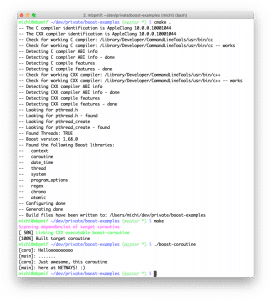

Next up, Nicolas Frankel took us on a journey into logs and scaling at Exoscale. Not being the only developer in the room, he showed that debug logging with computed results actually eats a lot of resources. Passing handles/pointers to a lazy log function is key here, which reminds me of rewriting the logging backend for Icinga 2 😉 Digging deeper, he showed a UML diagram with the log flow – Filebeat collects the logs, Logstash parses them into JSON and Elasticsearch stores the result. If you want to go fast, you don’t care about the schema and let ES do the work. Running a query will then be slow, since it doesn’t really match the best index – lesson learned. To conclude, we’ve learned that Filebeat can actually parse the log events into JSON already, so if you don’t need advanced filtering, remove Logstash from your log event stream for better performance.
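To make the lazy logging point a bit more tangible, here is a minimal sketch in Python. It is not the code from the talk; the `Lazy` wrapper and `expensive_summary` are hypothetical names made up for illustration. The idea is simply that the costly value is handed over as something callable and only computed if the log line is really emitted.

```python
import logging

# Debug messages are disabled at INFO level.
logging.basicConfig(level=logging.INFO)
log = logging.getLogger("demo")

# Counter to observe how often the expensive computation runs.
calls = {"count": 0}


def expensive_summary():
    """Hypothetical stand-in for a costly computed debug value."""
    calls["count"] += 1
    return sum(i * i for i in range(100_000))


class Lazy:
    """Hypothetical helper: wraps a callable and evaluates it only
    when the log message is actually formatted."""

    def __init__(self, fn):
        self.fn = fn

    def __str__(self):
        return str(self.fn())


# Eager: the value is computed before debug() is even called,
# although the message is thrown away afterwards.
log.debug("summary=%s" % expensive_summary())

# Lazy: only the wrapper is passed along; since DEBUG is disabled,
# the logging module never formats the message and never calls fn.
log.debug("summary=%s", Lazy(expensive_summary))
```

After running both calls, the counter shows that only the eager variant paid for the computation. An explicit `log.isEnabledFor(logging.DEBUG)` guard around the call would be another way to get the same effect.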

Right before the magnificent lunch, Dan Barker, chief architect at RSA Security for the Archer platform, shared stories about moving from normal production environments to actually following the DevOps spirit. Or, to avoid these hard buzzwords, just like „agile“, and to quote: „A former colleague told me: ‚I’ve now understood agile – it’s like waterfall but with shorter steps.‘“ He also talked about important things – you’re not alone, and praise your team members publicly.

Something new at OSDC: Ignites

Ignite time after lunch – Werner Fischer challenged himself with a few seconds per slide explaining microcode debugging to the audience, while Tim Meusel shared the awesome work going on within the Puppet community at Voxpupuli, with lots of automation involved (modulesync, etc). Dan Barker talked really fast about monitoring best practices: one shouldn’t put metrics into log aggregation tools, and one should use real business metrics.

The new hot shit

Demo time – James „purpleidea“ Shubin showed the latest developments on mgmt configuration, including the DSL similar to Puppet. Seeing the real-time change detection combined with dynamic processing, e.g. setting the CPU counts, really looks promising. Also, the sound exaggeration tests with the audience were just awesome. James not only needs hackers, docs writers and testers, but also sponsors for more awesome resource types and data collectors (similar to Puppet facts).

Our Achim „AL“ Ledermüller shared the war stories on our storage system, ranging from commercial NetApp to GlusterFS („no one uses that in production“) up to the final destination with Ceph. Addictive story, with Tim mimicking the customer asking why the clusterfuck happened again 😉

Kedar Bidarkar from Red Hat told us more about KubeVirt, which extends the custom resource definitions available from k8s with a VM type. There are several components involved in order to actually run a virtual machine: operator, api, handler and launcher. If I understand that correctly, this combines Kubernetes and Libvirt to launch real VMs instead of containers – sounds interesting and complicated in the same sentence.

Kubernetes operators the easy way – Matt Jarvis from Mesosphere introduced Kudo today. Creating native Kubernetes operators can become really complex, as you need to know a lot about the internals of k8s. Kudo aims to simplify creating such operators with a universal declarative operator configured via YAML.

Oh, they have food too!

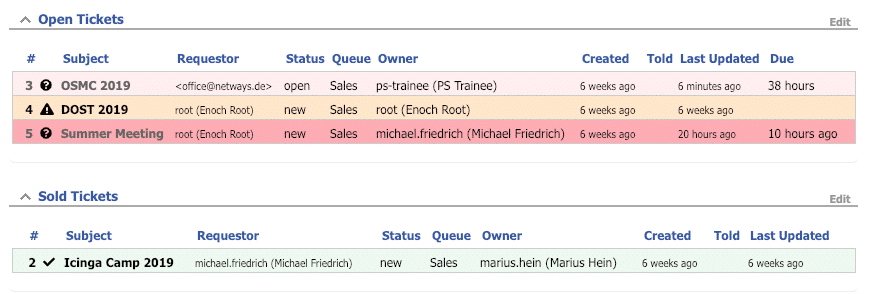

The many coffee breaks with delicious Käsekuchen (or: Kaiser Torte ;)) also invite you to visit our sponsor booths. Keep an eye on the peeps from Thomas-Krenn AG, they have #drageekeksi from Austria with them. We’re now off to the evening event at the Spree river, chatting about the things learnt thus far over a G&T or a beer 🙂

PS: Follow the #osdc stream and NetwaysEvents on Twitter for more, and join us next year!